Published on February 01, 2023


Introduction

This post takes a deep dive into a TLS connection issue in a Kubernetes cluster where Cilium was installed to restrict network access. Accessing specific AWS endpoints using the AWS CLI from a Kubernetes pod resulted in a failed response. Troubleshooting with Cilium, Hubble, Envoy, and tcpdump revealed that the Envoy proxy, which terminates and re-originates the TLS traffic, was causing the issue, and that the missing TLS server_name (SNI) extension was to blame. The post closes with the resolution and how we validated it.

Cilium is an open-source networking and security solution for Kubernetes. Using the Linux kernel’s eBPF technology, it provides features such as network segmentation, load balancing, service discovery, and security.

TLS visibility in Cilium refers to Cilium's ability to inspect and monitor TLS traffic passing through the cluster. Cilium does this by terminating the TLS connection in its Envoy proxy and originating a new TLS connection to the destination, so the traffic is available in plaintext inside the proxy. This allows Cilium to extract information such as the certificate used, the HTTP method, client and server identity, and the application protocol in use. This information can then be used to enforce security policies, such as allowing or denying access based on the identity of the client or server, or on HTTP methods.

AWS S3 Multi-Region Access Point

AWS S3 Multi-Region Access Point (MRAP) provides a global endpoint for routing S3 request traffic between AWS regions. It lets users access Amazon S3 bucket data using a single Amazon Resource Name (ARN) and DNS hostname, regardless of the bucket’s physical location, so data stored in buckets across multiple regions can be reached without configuring and managing multiple endpoint URLs.

More information on using this service can be found in the AWS docs.
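The AWS CLI turns an MRAP ARN into the global hostname it connects to; the debug log in the troubleshooting section shows s3://arn:aws:s3::<aws-accountnumber>:accesspoint/mraps3sample.mrap becoming mraps3sample.mrap.accesspoint.s3-global.amazonaws.com. The mapping can be sketched as below. This is an illustration, not botocore's code: the function name mrap_hostname is hypothetical, and it assumes the commercial aws partition and an alias ending in .mrap.

```python
def mrap_hostname(arn: str) -> str:
    """Map an MRAP ARN (optionally prefixed with s3://) to its global hostname."""
    if arn.startswith("s3://"):
        arn = arn[len("s3://"):]
    # arn:<partition>:s3::<account-id>:accesspoint/<alias>[/<key prefix>]
    # MRAP ARNs have an empty region field (the "::" after "s3").
    parts = arn.split(":", 5)
    if len(parts) != 6 or parts[0] != "arn" or parts[2] != "s3" or parts[3] != "":
        raise ValueError(f"not a Multi-Region Access Point ARN: {arn!r}")
    if parts[1] != "aws":
        # Other partitions use different DNS suffixes; not handled in this sketch.
        raise ValueError(f"unsupported partition: {parts[1]!r}")
    kind, _, rest = parts[5].partition("/")
    alias = rest.split("/", 1)[0]  # drop any key prefix after the alias
    if kind != "accesspoint" or not alias.endswith(".mrap"):
        raise ValueError(f"not a Multi-Region Access Point ARN: {arn!r}")
    return f"{alias}.accesspoint.s3-global.amazonaws.com"


if __name__ == "__main__":
    print(mrap_hostname("s3://arn:aws:s3::123456789012:accesspoint/mraps3sample.mrap"))
```

The account ID 123456789012 is a placeholder. The resulting hostname is what the toFQDNs rules below have to match.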

Issue Overview

One of our clients uses AWS S3 MRAP to distribute data to its customers throughout the world. The data is accessed from workloads in Kubernetes clusters, where Cilium is installed to restrict network access via Cilium L7 filters. Below is a sample of the Cilium network policy installed on the Kubernetes cluster.

```yaml
apiVersion: "cilium.io/v2"
kind: CiliumNetworkPolicy
metadata:
  name: "l7-visibility-tls"
spec:
  description: L7 policy with TLS
  endpointSelector:
    matchLabels:
      org: empire
      class: mediabot
  egress:
  - toFQDNs:
    - matchPattern: "*.s3.amazonaws.com"
    - matchPattern: "*.mrap.accesspoint.s3-global.amazonaws.com"
    toPorts:
    - ports:
      - port: "443"
        protocol: "TCP"
      terminatingTLS:
        secret:
          namespace: "kube-system"
          name: "artii-tls-data"
      originatingTLS:
        secret:
          namespace: "kube-system"
          name: "tls-orig-data"
      rules:
        http:
        - {} # enforce HTTP policies here
```

The above policy applies only to pods whose labels match the endpointSelector. It allows egress only to hostnames matching the toFQDNs list, and that traffic must also satisfy the HTTP rules.

The terminatingTLS, originatingTLS, and rules.http sections inside the toPorts block tell Cilium to use TLS interception: it terminates the outbound TLS connection from the mediabot pod and initiates a new outbound TLS connection to the endpoint matched by the matchPattern entries.
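Which hostnames the two matchPattern entries admit can be checked with a small re-implementation. This is a sketch, not Cilium's code: it assumes Cilium's matchPattern semantics where * expands to the DNS-character class [-a-zA-Z0-9_]*, so it matches within a single label and never crosses a dot, while a bare * matches every name. The function matches and the bucket name mybucket are hypothetical.

```python
import re

# Assumption: "*" expands to this DNS-character class, so it stays inside a
# single label; a bare "*" matches every name. Mirrors, but is not, Cilium.
DNS_CHARS = "[-a-zA-Z0-9_]"

POLICY_PATTERNS = ["*.s3.amazonaws.com", "*.mrap.accesspoint.s3-global.amazonaws.com"]


def matches(pattern: str, hostname: str) -> bool:
    """Return True if `hostname` is allowed by a toFQDNs matchPattern."""
    pattern = pattern.lower().rstrip(".")
    hostname = hostname.lower().rstrip(".")
    if pattern == "*":
        return True
    # Escape every regex metacharacter, then turn the escaped "*" back into
    # the single-label wildcard.
    regex = re.escape(pattern).replace(r"\*", DNS_CHARS + "*")
    return re.fullmatch(regex, hostname) is not None


if __name__ == "__main__":
    for host in ("mybucket.s3.amazonaws.com",
                 "mraps3sample.mrap.accesspoint.s3-global.amazonaws.com"):
        print(host, [p for p in POLICY_PATTERNS if matches(p, host)])
```

Under this model the MRAP hostname ends in .s3-global.amazonaws.com rather than .s3.amazonaws.com, so it is admitted only by the second pattern, which is why the policy lists both.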

We discovered that accessing AWS S3 MRAP endpoints using the AWS CLI from a Kubernetes pod with this policy applied results in a 503 Service Unavailable response.

Troubleshooting

Identifying Failure Conditions

We started troubleshooting by enabling AWS CLI debug logging while connecting to the MRAP endpoint. The debug logs below show that the 503 came from the Envoy proxy rather than from S3: the response carries a 'server': 'envoy' header and Envoy's "upstream connect error" body. The Envoy proxy, which is responsible for terminating and re-originating the TLS traffic, appeared to be causing the issue.

```
% aws s3 ls s3://arn:aws:s3::<aws-accountnumber>:accesspoint/mraps3sample.mrap --debug
2023-02-01 21:45:19,932 - MainThread - urllib3.connectionpool - DEBUG - Starting new HTTPS connection (1): mraps3sample.mrap.accesspoint.s3-global.amazonaws.com:443
2023-02-01 21:45:24,057 - MainThread - urllib3.connectionpool - DEBUG - https://mraps3sample.mrap.accesspoint.s3-global.amazonaws.com:443 "GET /?list-type=2&prefix=&delimiter=%2F&encoding-type=url HTTP/1.1" 503 91
2023-02-01 21:45:24,057 - MainThread - botocore.parsers - DEBUG - Response headers: {'content-length': '91', 'content-type': 'text/plain', 'date': 'Wed, 01 Feb 2023 21:45:23 GMT', 'server': 'envoy'}
2023-02-01 21:45:24,057 - MainThread - botocore.parsers - DEBUG - Response body:
b'upstream connect error or disconnect/reset before headers. reset reason: connection failure'
2023-02-01 21:45:24,057 - MainThread - botocore.parsers - DEBUG - Exception caught when parsing error response body:
```
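In longer debug logs, the proxy-generated errors can be picked out mechanically. The sketch below assumes the botocore/urllib3 line format shown above (headers logged as a Python dict repr, the body as a bytes repr on the following line); the function name envoy_error_replies is hypothetical. Because Envoy may also stamp server: envoy on responses it merely proxies, the sketch reports the status and body together so the "upstream connect error" text can confirm a local reply.

```python
import ast
import re
import sys
from typing import List, Optional, Tuple

# urllib3's summary line, e.g. ... "GET /?list-type=2... HTTP/1.1" 503 91
STATUS_RE = re.compile(r'"[A-Z]+ \S+ HTTP/[\d.]+" (\d{3})\b')
HEADERS_MARKER = "Response headers: "


def envoy_error_replies(log_text: str) -> List[Tuple[int, str]]:
    """Return (status, body) for 5xx responses whose server header is 'envoy'."""
    lines = log_text.splitlines()
    replies: List[Tuple[int, str]] = []
    status: Optional[int] = None
    server: Optional[str] = None
    for i, line in enumerate(lines):
        match = STATUS_RE.search(line)
        if match:
            status, server = int(match.group(1)), None  # new response starts
        elif HEADERS_MARKER in line:
            # botocore logs the headers as a Python dict repr.
            headers = ast.literal_eval(line.split(HEADERS_MARKER, 1)[1])
            server = headers.get("server")
        elif line.rstrip().endswith("Response body:"):
            # The body is logged on the next non-empty line.
            j = i + 1
            while j < len(lines) and not lines[j].strip():
                j += 1
            if j < len(lines) and status is not None and status >= 500 and server == "envoy":
                body = ast.literal_eval(lines[j].strip())
                if isinstance(body, bytes):
                    body = body.decode("utf-8", "replace")
                replies.append((status, body))
    return replies


if __name__ == "__main__":
    with open(sys.argv[1], encoding="utf-8") as f:
        for status, body in envoy_error_replies(f.read()):
            print(status, body)
```

Since the AWS CLI writes --debug output to stderr, a capture such as aws s3 ls ... --debug 2> debug.log produces a file this script can read.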

Next, we removed the L7 rules (and with them the TLS interception), so that the cilium-agent process makes the egress decision based strictly on the destination FQDN:

```yaml
apiVersion: "cilium.io/v2"
kind: CiliumNetworkPolicy
metadata:
  name: "l4-pass-through"
spec:
  description: L4 policy without TLS inspection
  endpointSelector:
    matchLabels:
      org: empire
      class: mediabot
  egress:
  - toFQDNs:
    - matchPattern: "*.s3.amazonaws.com"
    - matchPattern: "*.mrap.accesspoint.s3-global.amazonaws.com"
```

After we removed the L7 enforcement, the request succeeded! This confirmed that the issue was occurring within the cilium-envoy process. At this point, we had the following classification of the conditions under which the failure occurs:

| Policy | MRAP | Standard S3 |
|---|---|---|
| TLS Interception | 503 - connect timeout | Success |
| Layer 4 Policies | Success | Success |

Now, we needed to dig deeper into the Layer 7 enforcement mechanism.

Enabling Cilium Envoy Debug Logs

Cilium Envoy debug logs can be enabled by adding the following command-line arguments to the Cilium agent container in the Cilium DaemonSet.

```yaml
containers:
- args:
  - --config-dir=/tmp/cilium/config-map
  - --envoy-log=/tmp/envoy.log
  - --debug-verbose=envoy
  command:
  - cilium-agent
```

After restarting the DaemonSet, we sent traffic through the proxy and captured a few important lines from the resulting debug log.

```
[2023-01-05 20:29:19.692][446][debug][router] [C4][S7205163356431684926] upstream reset: reset reason: connection failure, transport failure reason:
[2023-01-05 20:29:19.693][446][debug][http] [C4][S7205163356431684926] Sending local reply with details upstream_reset_before_response_started{connection_failure}
[2023-01-05 20:29:19.692][446][debug][pool] [C8] connect timeout
```

The upstream connection failure messages in the debug logs meant that the Envoy proxy couldn’t connect to the MRAP endpoint. When the proxy cannot connect to the upstream, it generates its own 503 response, which matches the body the AWS CLI received. This again pointed at Cilium Envoy’s TLS termination and origination. We suspected that Envoy originates TLS in a slightly different way that MRAP specifically rejects, since TLS origination works fine for standard S3 endpoints.
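When the Envoy log grows large, the failure lines can be filtered out programmatically. The sketch assumes the line layout shown above: a [timestamp][thread][level][component] prefix followed by connection and stream IDs such as [C4] and [S7205163356431684926]. The marker strings and the name upstream_failures are illustrative choices, not an Envoy API.

```python
import re
from typing import List, NamedTuple, Optional

# [timestamp][thread][level][component] message
LINE_RE = re.compile(
    r"^\[(?P<ts>[^\]]+)\]\[(?P<thread>\d+)\]\[(?P<level>\w+)\]\[(?P<component>\w+)\]\s*(?P<msg>.*)$"
)
CONN_RE = re.compile(r"\[C(\d+)\]")
# Illustrative markers for upstream connection problems and local replies.
FAILURE_MARKERS = ("upstream reset", "connect timeout", "Sending local reply")


class EnvoyEvent(NamedTuple):
    timestamp: str
    component: str
    connection: Optional[int]
    message: str


def upstream_failures(log_text: str) -> List[EnvoyEvent]:
    """Return the log lines that describe upstream failures or local replies."""
    events = []
    for line in log_text.splitlines():
        m = LINE_RE.match(line.strip())
        if not m:
            continue
        msg = m.group("msg")
        if not any(marker in msg for marker in FAILURE_MARKERS):
            continue
        conn = CONN_RE.search(msg)
        # Strip the leading [C..][S..] IDs, keeping only the readable text.
        text = re.sub(r"^(\[[CS]\d+\])+\s*", "", msg)
        events.append(EnvoyEvent(m.group("ts"), m.group("component"),
                                 int(conn.group(1)) if conn else None, text))
    return events
```

Grouping the events by connection ID separates the downstream request ([C4], where the local reply is sent) from the pooled upstream connection that timed out ([C8]).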

Hubble

Hubble is a network observability and security solution that is built on top of Cilium. It provides a set of features that allow users to monitor, troubleshoot and secure the network traffic in a Kubernetes cluster.

Hubble gives users a detailed view of the network traffic in a Kubernetes cluster, including the source and destination of the traffic, the protocol being used, and the policies being enforced. This information can be used to troubleshoot issues, identify security threats, and support compliance with regulatory requirements. Given the issues we had encountered, we used the Hubble UI to watch the real-time traffic to both the MRAP endpoint and the standard S3 endpoint with TLS interception turned on.

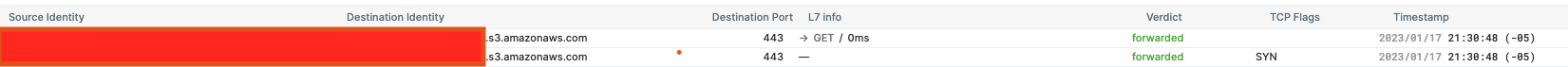

With TLS interception turned on, the service map in the Hubble UI showed the traffic flow between the pod and the standard S3 bucket, and Cilium was able to extract the L7 info, which contains fields such as the HTTP request, HTTP method, and other request headers.

For the MRAP traffic flow, no requests had decoded L7 info; only the L4 data was visible.

Since Hubble showed no L7 telemetry for the failed requests, it gave us no further concrete evidence about the failure, so we moved on to the Envoy admin console.

Envoy Admin Console

The Envoy admin console is an HTTP administration interface for inspecting the configuration and status of an Envoy proxy. It exposes the proxy’s configuration, cluster status, certificates, and statistics in real time, and lets operators change some runtime settings such as log levels, which makes it useful for troubleshooting.

Envoy runs as a process inside the Cilium agent pod, and its admin console is exposed on a Unix domain socket. We can use socat to map the Unix socket to a TCP port and then port-forward that port to our local machine. Follow the steps below to access the Envoy admin console.

- Exec into the Cilium pod:

```
kubectl exec -it cilium-8d7mt -n kube-system -- bash
```

- Install socat to map the Unix socket to a TCP port (tcpdump is installed here too, for later):

```
apt update -y && apt install socat tcpdump -y
```

- Map the envoy-admin Unix socket to a TCP port:

```
socat TCP-LISTEN:12345,fork UNIX-CONNECT:/var/run/cilium/envoy-admin.sock
```

- Then, from a local terminal, port-forward the TCP port to localhost:

```
kubectl port-forward cilium-8d7mt 12345:12345 -n kube-system
```
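Once the port-forward is up, the admin endpoints can also be queried from a script. The sketch below either talks to the port-forwarded TCP port on your workstation or, assuming a Python interpreter is available in the Cilium container (the image may not ship one), dials the Unix socket directly without socat. UnixHTTPConnection and admin_get are hypothetical helpers; /clusters, /certs, and /stats (with its filter regex parameter) are standard Envoy admin endpoints.

```python
import http.client
import socket
from typing import Optional

ADMIN_SOCKET = "/var/run/cilium/envoy-admin.sock"  # path used in the socat step


class UnixHTTPConnection(http.client.HTTPConnection):
    """An HTTPConnection that talks to a Unix domain socket instead of host:port."""

    def __init__(self, socket_path: str, timeout: float = 5.0) -> None:
        # "localhost" only fills in the Host header; no DNS lookup happens.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self) -> None:
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def admin_get(path: str, socket_path: Optional[str] = None, port: int = 12345) -> str:
    """GET an Envoy admin endpoint via the Unix socket (in the pod) or the
    port-forwarded TCP port (on your workstation)."""
    if socket_path:
        conn: http.client.HTTPConnection = UnixHTTPConnection(socket_path)
    else:
        conn = http.client.HTTPConnection("localhost", port, timeout=5.0)
    try:
        conn.request("GET", path)
        return conn.getresponse().read().decode("utf-8", "replace")
    finally:
        conn.close()


if __name__ == "__main__":
    for endpoint in ("/clusters", "/certs", "/stats?filter=5xx"):
        print(f"== {endpoint}")
        print(admin_get(endpoint)[:2000])
```

Inside the pod, admin_get("/clusters", socket_path=ADMIN_SOCKET) would skip socat entirely; on the workstation the default port matches the port-forward above.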

Using the admin console, we dumped the config, checked the cluster status, and examined the certs. The Cilium network policy shown earlier references two secrets, one for TLS termination and one for TLS origination. We inspected both to confirm that the certificates were valid and that the termination certificate included the wildcard SAN *.mrap.accesspoint.s3-global.amazonaws.com, which Cilium needs to terminate the connection properly.

Everything appeared healthy from this perspective, except that the inbound requests to the TLS cluster showed an increasing count of 503 responses!
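Spotting a growing error count is easier when two snapshots of the plain-text /stats output (one "name: value" pair per line, for example fetched with curl http://localhost:12345/stats through the port-forward) are diffed. In this sketch the suffix list is an assumption: Envoy counters such as cluster.<name>.upstream_cx_connect_timeout and http.<prefix>.downstream_rq_5xx follow this naming, but the exact names depend on the configuration Cilium generates, and the names in the usage test are hypothetical.

```python
from typing import Dict

# Assumed suffixes of error-related Envoy counters worth watching.
ERROR_SUFFIXES = ("_5xx", "_503", "_connect_fail", "_connect_timeout")


def error_counters(stats_text: str) -> Dict[str, int]:
    """Parse Envoy's plain-text /stats output and keep error counters."""
    counters: Dict[str, int] = {}
    for line in stats_text.splitlines():
        name, sep, value = line.partition(": ")
        value = value.strip()
        # Skip histograms and any other non-integer values.
        if sep and name.endswith(ERROR_SUFFIXES) and value.isdigit():
            counters[name] = int(value)
    return counters


def increased(before: Dict[str, int], after: Dict[str, int]) -> Dict[str, int]:
    """Return the counters that grew between two snapshots, with their deltas."""
    return {name: after[name] - before.get(name, 0)
            for name in after if after[name] > before.get(name, 0)}
```

Taking one snapshot, sending a request to the MRAP endpoint, and taking another makes it clear which counter the failing request increments.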

curl Requests

Cilium Envoy terminates the initial TLS connection from the downstream client and creates a new TLS connection to the upstream. The Envoy proxy must be told which set of CAs to trust when validating the new TLS connection to the destination service. More information on the Cilium certificate model can be found in the Cilium docs. We wanted to verify the CA files we were providing to Envoy via the Cilium network policy.

We downloaded the CA data from the cluster and the Envoy proxy, ran curl from our local machine against the MRAP endpoint using these files, and verified that the CA file was valid.

```
curl -vvv 'https://mraps3sample.mrap.accesspoint.s3-global.amazonaws.com/?list-type=2&prefix=&delimiter=%2F&encoding-type=url' --cacert ca.crt
...
* (304) (OUT), TLS handshake, Finished (20):
...
< HTTP/2 401
```
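The same CA check can be sketched with Python's standard library. This mirrors the curl command rather than anything Cilium runs; the names origination_context and probe are hypothetical, and ca.crt is the file downloaded above. Any HTTP status at all (curl received a 401 for the unsigned request) means the handshake completed with certificate and hostname verification enabled.

```python
import http.client
import ssl
from typing import Optional

MRAP_HOST = "mraps3sample.mrap.accesspoint.s3-global.amazonaws.com"
LIST_PATH = "/?list-type=2&prefix=&delimiter=%2F&encoding-type=url"


def origination_context(cafile: Optional[str]) -> ssl.SSLContext:
    """Client-side TLS context that trusts only `cafile` (system CAs if None)."""
    # With a cafile, create_default_context loads just that bundle instead of
    # the system store; certificate and hostname checks are on by default.
    return ssl.create_default_context(cafile=cafile)


def probe(cafile: str = "ca.crt", host: str = MRAP_HOST, path: str = LIST_PATH) -> int:
    """Send the same unsigned GET as the curl command and return the HTTP status."""
    conn = http.client.HTTPSConnection(host, 443, timeout=10,
                                       context=origination_context(cafile))
    try:
        # HTTPSConnection passes `host` as server_hostname, so SNI is sent.
        conn.request("GET", path)
        return conn.getresponse().status
    finally:
        conn.close()


if __name__ == "__main__":
    print(probe())
```

Note that this client, like curl and the AWS CLI, sends SNI automatically; that detail turns out to matter below.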

Since some Layer 7 outbound requests (to standard S3) were working, it was still possible that an unknown TLS decryption error was hidden in the Cilium Envoy filters. The Envoy logs showed no sign of this, and the direct requests to this endpoint confirmed that the certificate material used for TLS interception was valid.

tcpdump

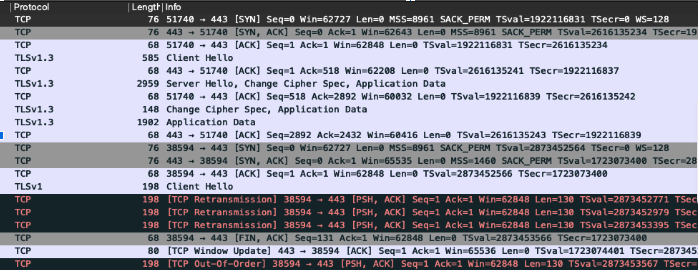

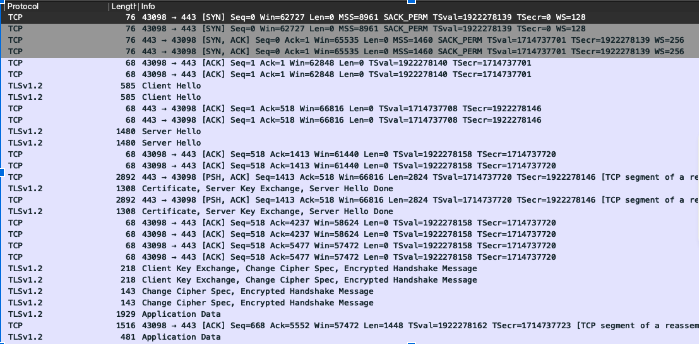

We wanted to see what was different between the TLS connection established by the AWS CLI and the one originated by Cilium Envoy. We used tcpdump to capture packets and compare the traffic to MRAP with TLS inspection enabled versus disabled.

```
tcpdump -i any -n -w pcapfile.pcap host mraps3sample.mrap.accesspoint.s3-global.amazonaws.com
```

The pcap files provided many insights into the traffic, the TLS version, and the SNI.

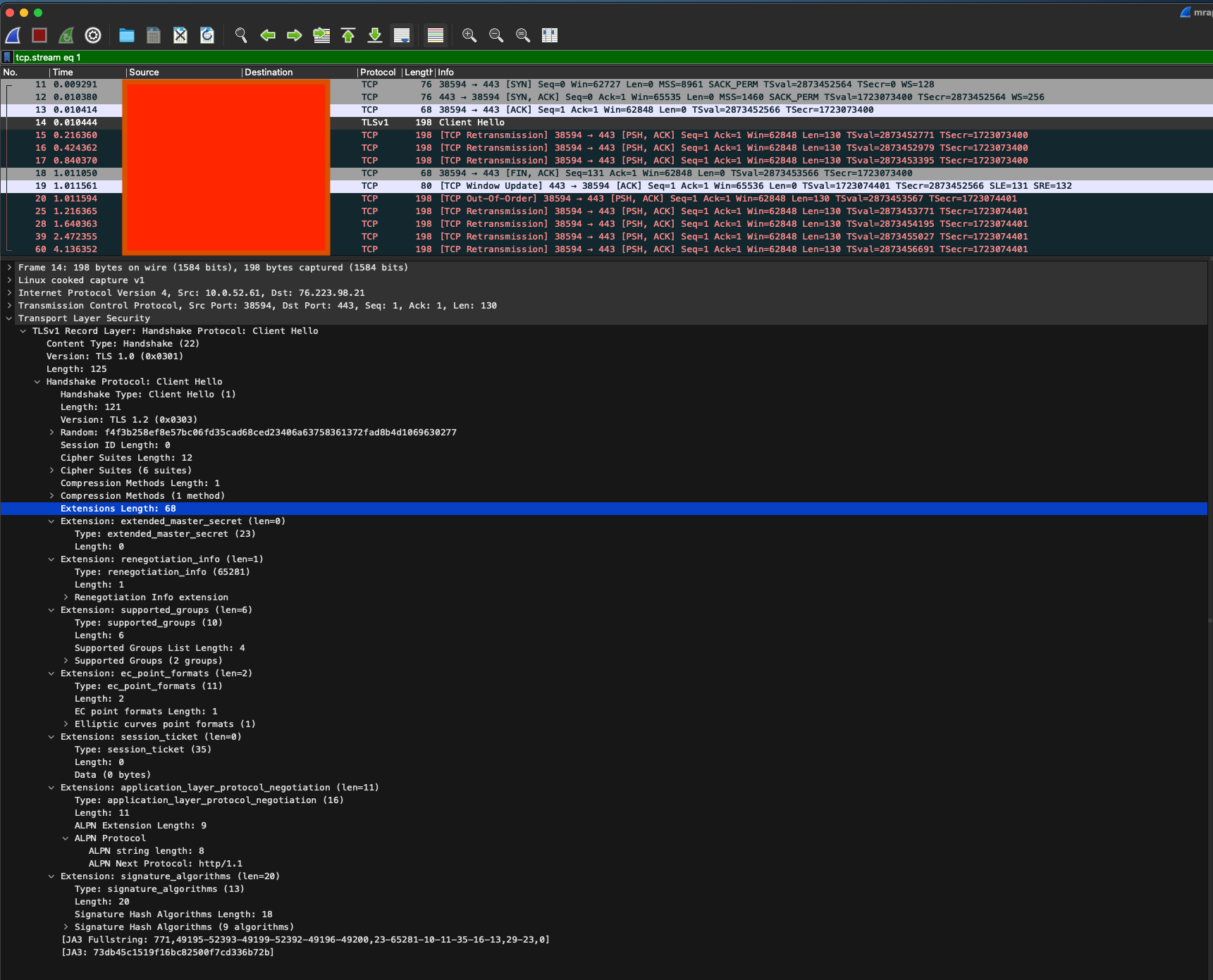

There was no SNI information in the failed connections, while in the working requests the SNI appeared correctly in the packet capture. Let’s take a look at the PCAP file in Wireshark for a failed request:

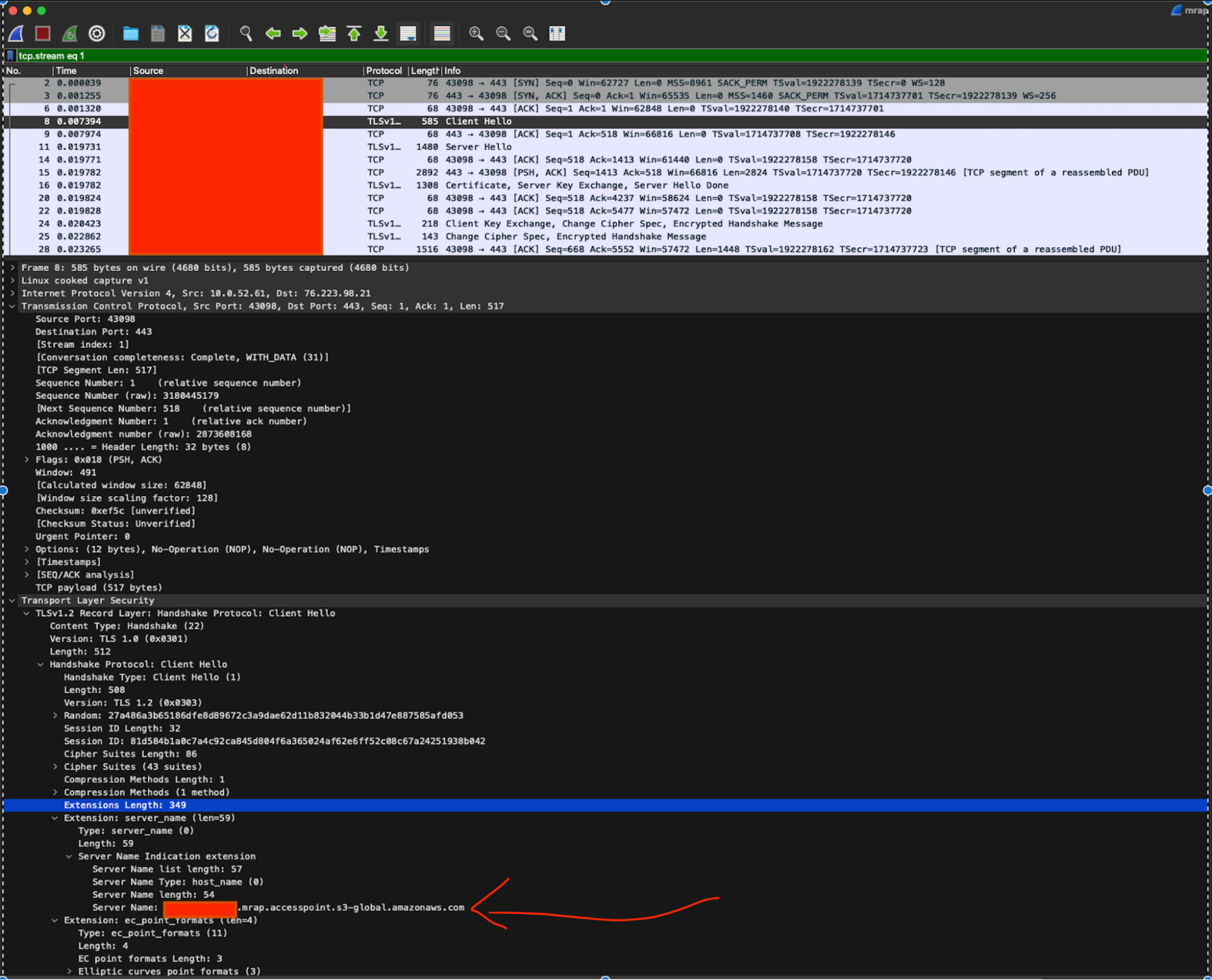

SNI is carried in the TLS ClientHello as the server_name extension, so let’s look at the PCAP of a successful connection in Wireshark and find that extension.

Comparing the two packet captures in Wireshark shows the difference: the ClientHello of the failed connection lacks the Server Name Indication extension.

Without this extension, the AWS network infrastructure cannot route requests to the correct backend, because the hostname is otherwise only present inside the encrypted HTTP request. The front end can act only on layer 4 information, so the connection is dropped before it reaches a backend. This is why we never see a TLS Server Hello from the MRAP backends in these failed requests.
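The difference Wireshark showed can be reproduced without a network. The sketch below uses Python's ssl module with in-memory BIOs to generate a ClientHello once with server_hostname and once without, then walks the record to find extension type 0 (server_name, RFC 6066). It illustrates the protocol only; it is not how Envoy builds its handshake, and the helper names are hypothetical.

```python
import ssl
from typing import Optional


def build_client_hello(server_hostname: Optional[str]) -> bytes:
    """Return the first TLS record Python's ssl module sends: the ClientHello."""
    ctx = ssl.create_default_context()
    # The handshake never completes here, so no certificate is ever checked.
    # Never disable verification like this on a real connection.
    ctx.check_hostname = False
    ctx.verify_mode = ssl.CERT_NONE
    incoming, outgoing = ssl.MemoryBIO(), ssl.MemoryBIO()
    tls = ctx.wrap_bio(incoming, outgoing, server_hostname=server_hostname)
    try:
        tls.do_handshake()
    except ssl.SSLWantReadError:
        pass  # expected: ClientHello written, now waiting for a ServerHello
    return outgoing.read()


def client_hello_sni(record: bytes) -> Optional[str]:
    """Return the server_name from a ClientHello record, or None if absent."""
    # 5-byte record header (0x16 = handshake) + 4-byte handshake header
    # (0x01 = ClientHello).
    if len(record) < 9 or record[0] != 0x16 or record[5] != 0x01:
        raise ValueError("not a TLS ClientHello record")
    pos = 9 + 2 + 32                                       # legacy_version, random
    pos += 1 + record[pos]                                 # session_id
    pos += 2 + int.from_bytes(record[pos:pos + 2], "big")  # cipher_suites
    pos += 1 + record[pos]                                 # compression_methods
    end = pos + 2 + int.from_bytes(record[pos:pos + 2], "big")
    pos += 2
    while pos + 4 <= end:
        ext_type = int.from_bytes(record[pos:pos + 2], "big")
        ext_len = int.from_bytes(record[pos + 2:pos + 4], "big")
        data = record[pos + 4:pos + 4 + ext_len]
        if ext_type == 0x0000:  # server_name
            # list length (2) | name_type (1) | name length (2) | host name
            name_len = int.from_bytes(data[3:5], "big")
            return data[5:5 + name_len].decode("ascii")
        pos += 4 + ext_len
    return None


if __name__ == "__main__":
    host = "mraps3sample.mrap.accesspoint.s3-global.amazonaws.com"
    print("with server_hostname:   ", client_hello_sni(build_client_hello(host)))
    print("without server_hostname:", client_hello_sni(build_client_hello(None)))
```

The first call should report the MRAP hostname and the second None, which is the same contrast between the successful and failed captures.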

Fix

We sent all of our findings to the Cilium maintainers, and they identified the issue: the SNI was not being passed to the upstream for TLS connections. The fix is in this pull request. The patch adds the AutoSni and AutoSanValidation flags (Envoy’s auto_sni and auto_san_validation options) to the Envoy configuration for inbound and outbound TLS requests so that the server_name extension is always passed.

We installed the patched Cilium on our Kubernetes cluster and were able to connect successfully to S3 MRAP with TLS visibility. A tcpdump taken after installing the patch showed the SNI information as expected: the outbound request originated by Envoy now carries the correct SNI, and the request succeeds.

Looking deeper into the PR, we see a related change in the Cilium Envoy filters code. This commit passes the SNI information to the filter state before the connection to the upstream is initiated. While this change doesn’t explain why the SNI was missing for MRAP but not for standard S3, it resolves the issue by ensuring that if SNI information is present in the request from the downstream, it is attached to the TLS connection Envoy originates to the upstream.
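Envoy’s auto_sni option sets the upstream SNI from the downstream request’s Host/:authority header, and auto_san_validation checks the upstream certificate against that same name. The derivation step can be modeled as below. This is a simplified sketch, not Envoy’s code: the function sni_from_authority is hypothetical, and it assumes the port is stripped and that IP literals produce no SNI, since RFC 6066 does not permit IP addresses in server_name.

```python
import ipaddress
from typing import Optional


def sni_from_authority(authority: str) -> Optional[str]:
    """Derive an SNI value from an HTTP Host/:authority header value."""
    host = authority.strip()
    if host.startswith("["):
        return None  # bracketed IPv6 literal, optionally with a port
    if host.count(":") == 1:
        host = host.split(":", 1)[0]  # drop a port such as ":443"
    try:
        ipaddress.ip_address(host)
    except ValueError:
        # Not an IP literal: DNS names are case-insensitive; drop a trailing dot.
        return host.lower().rstrip(".") or None
    return None  # IP literals never go in server_name


if __name__ == "__main__":
    print(sni_from_authority("mraps3sample.mrap.accesspoint.s3-global.amazonaws.com:443"))
```

In the failing setup, this is the step that was effectively missing on the origination side: the downstream request named the MRAP host, but the upstream ClientHello did not.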

Conclusion

We now have our Layer 7 policies enforcing outbound HTTP rules for S3 MRAP endpoints! We’re glad to have this issue behind us, and we continue to use the tools in our toolbelt to identify and resolve issues in our environment.

This post explores a number of tools for debugging TLS visibility in Cilium and Envoy. Feel free to reach out at hello@superorbital.io to discuss how you are using these tools in your Kubernetes environment.

Our team enjoys working with the Cilium open-source community and the Isovalent team and appreciates the contributions they have made to the Kubernetes community as a whole.