Published on May 04, 2022

Overview

Superorbital’s focus on delivering high-quality custom engineering services means that we are always on the lookout for new offerings that can supplement and improve the solutions that we recommend and build with our clients. In March of 2022, while working on an engineering engagement with EBSCO to help them update and streamline their CI/CD and Kubernetes workflows, we became aware of the aws-samples/aws-eks-accelerator-for-terraform GitHub project. It is an AWS-managed project that documents a Terraform pattern for creating EKS (Elastic Kubernetes Service) clusters that follow AWS best practices and can easily be bootstrapped with common Kubernetes tools, using Helm and/or ArgoCD. We were immediately intrigued by this project, as it mapped very closely to some conversations and recommendations that we had recently been having with the engineering leadership at EBSCO.

We decided to implement a thorough proof-of-concept using the project, to see what it was capable of and to contribute what we could back to the effort.

This post covers some of what followed and introduces a new GitHub repository that we assembled, which makes it easier for others to quickly explore this project and its capabilities.

The Project

The approach that AWS has taken with this project is to create a top-level Terraform module that can be used to spin up an EKS cluster that is flexible while still following many of AWS’s best practices, including least-privilege access between nodes, encryption, and more.

The progress they have made is great. There are still some rough edges and design choices that have to be considered, as we found out along the way. Luckily the maintainers have been great to work with and are very responsive to issues and contributions.

On April 20th, 2022, at the AWS Summit San Francisco, AWS publicly announced the project via a conference session and blog post. The project was also renamed and moved to its “permanent” home on GitHub at aws-ia/terraform-aws-eks-blueprints.

A Quick Start

If you are interested in exploring this project and would like a quick start, we have put together the superorbital/aws-eks-blueprint-examples GitHub repository, which uses the AWS blueprints and makes it easy to take the project for a test drive.

Basic Walk Through

1) By default, the AWS Terraform provider in this repo is expecting a profile named sandbox to exist in ${HOME}/.aws/credentials. You can override this if you want.

The simplest setup in ${HOME}/.aws/credentials would look something like the following, with the access key belonging to an IAM identity that has administrator access in the owning AWS account.

[sandbox]

aws_access_key_id=REDACTED

aws_secret_access_key=REDACTED

region=us-west-2

- NOTE: Administrator access is not required; it is simply the easiest thing to start with for testing.

2) Now let’s check out the Terraform code and scan through the README.

git clone https://github.com/superorbital/aws-eks-blueprint-examples.git

cd aws-eks-blueprint-examples

cat README.md

3) Spin up a new VPC (~2 minutes)

- Read through the Terraform code for the network.

cd ./accounts/sandbox/network/primary/

terraform init

terraform plan

terraform apply # -auto-approve

4) Spin up a new EKS cluster (~15 minutes)

- Read through the Terraform code for the EKS cluster.

cd ../../eks/poc/

terraform init

terraform plan

terraform apply # -auto-approve

5) Make sure that you can connect to the new EKS cluster

aws --profile=sandbox eks update-kubeconfig --region us-west-2 --name the-a-team-sbox-poc-eks

kubectl get namespaces

6) Bootstrap Core Kubernetes Software (~2 minutes)

- Read through the Terraform code for the Kubernetes deployments.

cd ../../k8s/poc/

terraform init

terraform plan

terraform apply # -auto-approve

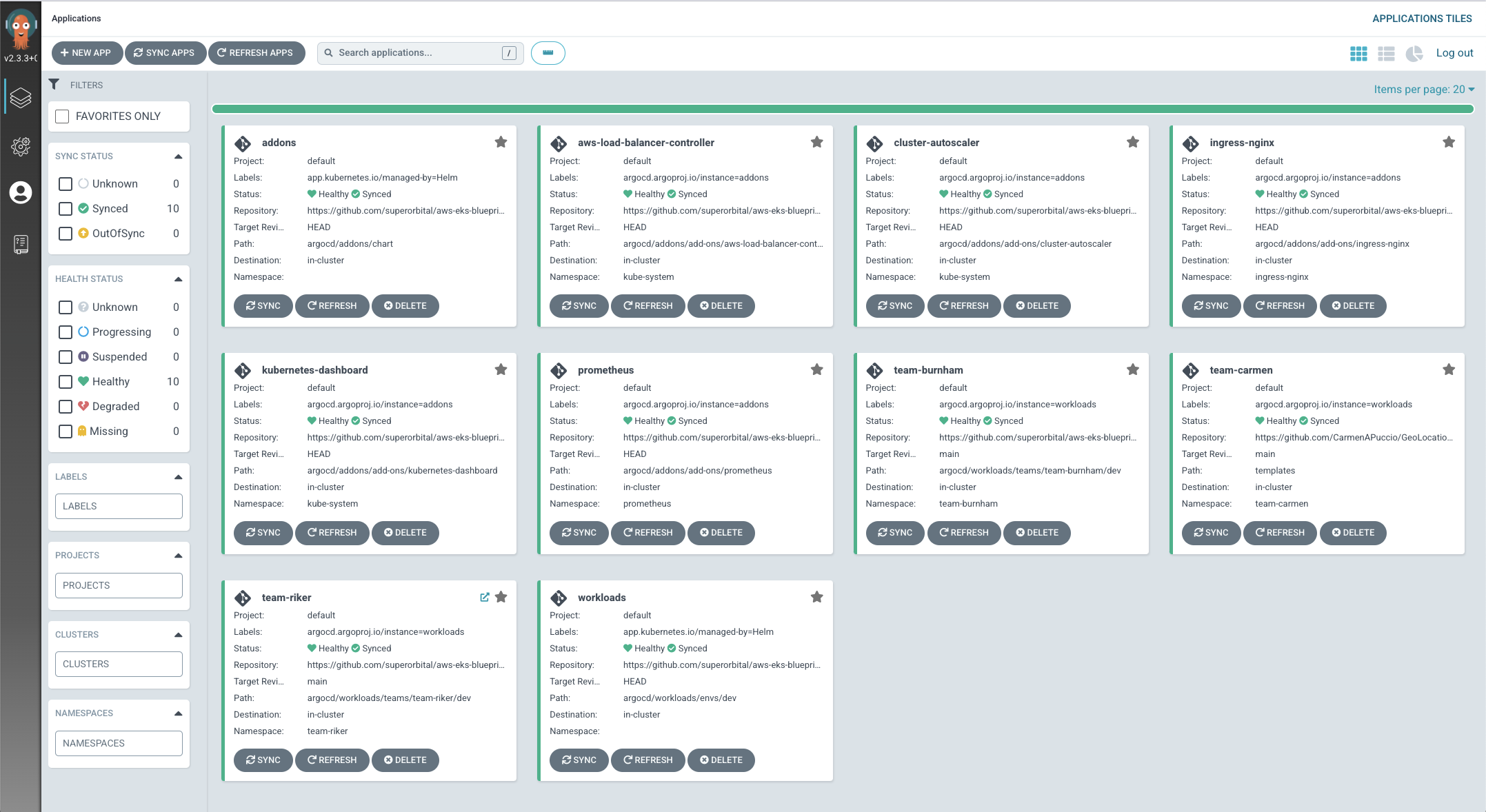

7) Explore the ArgoCD UI

- Retrieve the randomly-generated default ArgoCD admin password

kubectl -n argocd get secret argocd-initial-admin-secret -o jsonpath="{.data.password}" | base64 -d; echo

- Forward a local port to the ArgoCD Server service

kubectl port-forward -n argocd svc/argo-cd-argocd-server 8080:443

- And then open up a web browser and navigate to

https://127.0.0.1:8080

- username: admin

- password: use the output from the get secret command above

8) You can see how to connect to some of the other installed add-ons and workflows by checking out the README.
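Before starting the walkthrough above, a small preflight check can save a failed run. The following is only a sketch: the helper names has_profile and missing_tools are our own invention and are not part of the example repository. It assumes the credentials file uses the plain [sandbox] header shown in step 1.

```shell
#!/bin/sh
# Hypothetical preflight helpers; not part of the example repository.

# Succeeds if the named profile header exists in the given credentials file.
# Assumes the header is written exactly as "[name]" on its own line.
has_profile() {
  creds_file=$1
  profile=$2
  [ -f "$creds_file" ] && grep -qxF "[${profile}]" "$creds_file"
}

# Prints the name of every listed CLI that is not found on the PATH.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || echo "$tool"
  done
}

# Example usage:
#   missing_tools git terraform aws kubectl
#   has_profile "${HOME}/.aws/credentials" sandbox || echo "no sandbox profile found"
```

If missing_tools prints nothing and has_profile succeeds, the commands in the walkthrough should at least be able to start.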

The Results

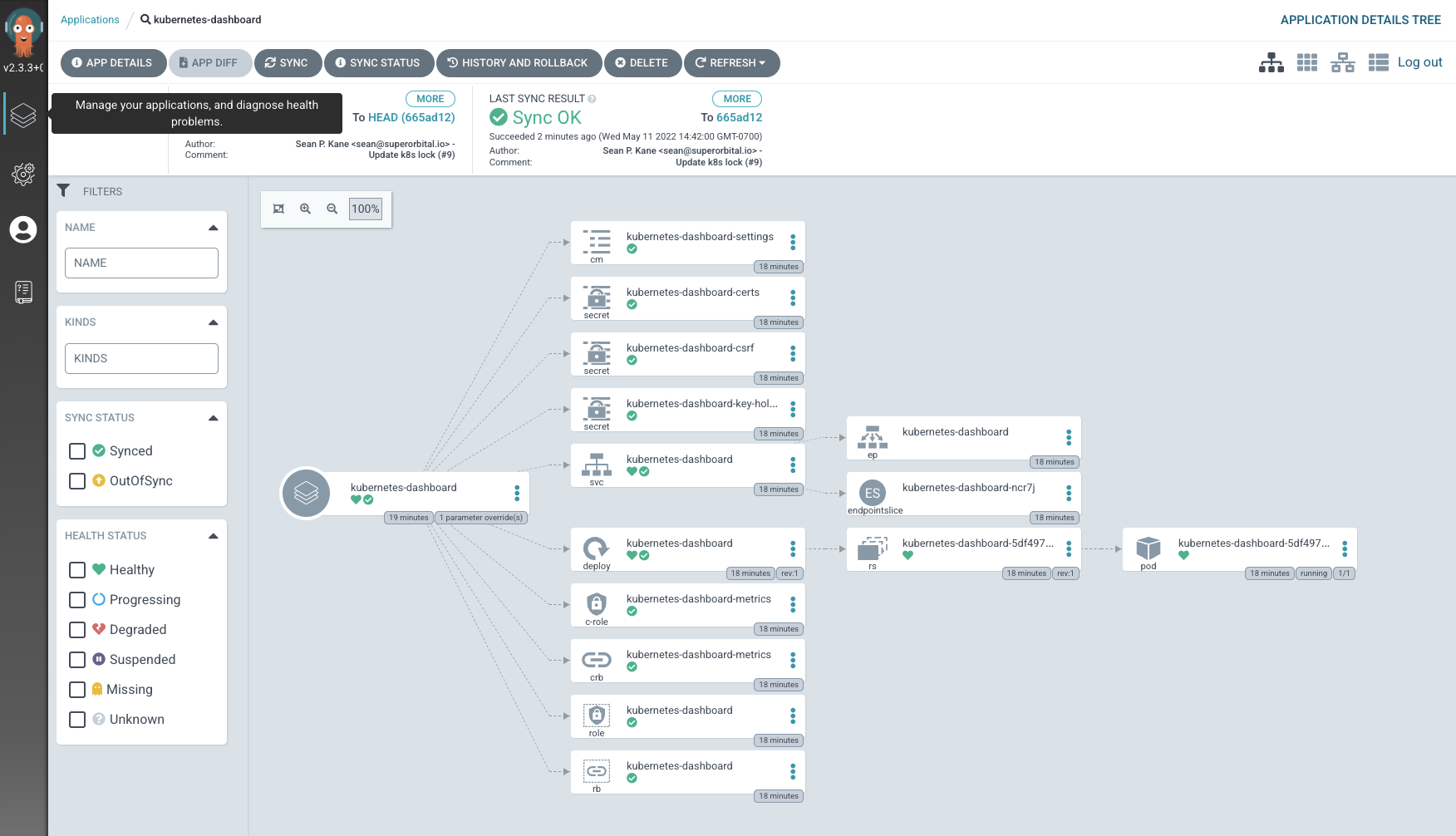

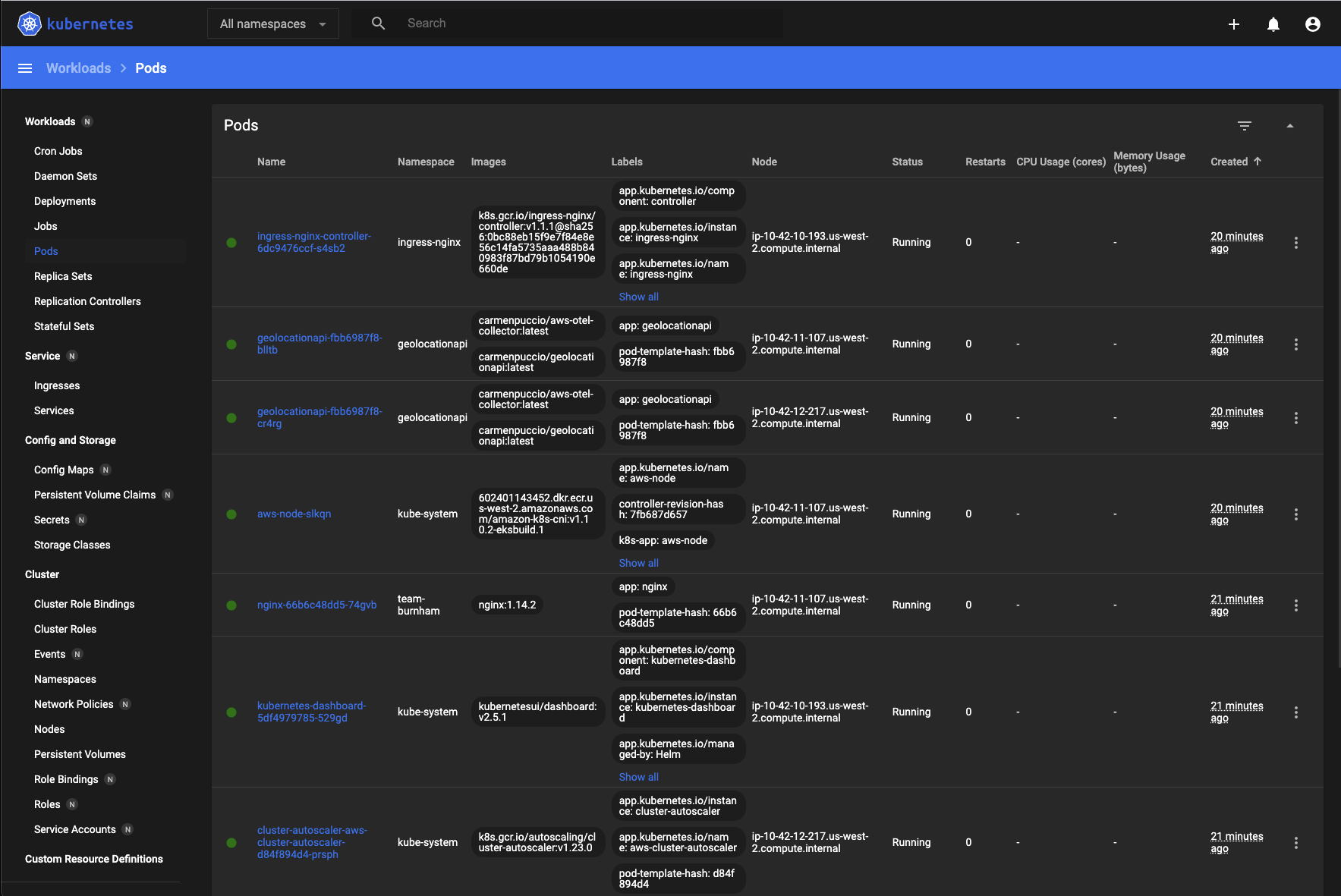

By applying these three Terraform modules, we have gone from a potentially empty AWS account to an EKS cluster that already contains some core Kubernetes software and can easily be targeted by an existing CI/CD pipeline.
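For repeat runs, the three applies from the walkthrough can be scripted in dependency order. This is a rough sketch and not something shipped with the repository: the DRY_RUN switch and the function names are hypothetical, the directory paths are inferred from the cd commands in the walkthrough, and the kubeconfig step is left out. It is meant to be run from the root of the cloned repository.

```shell
#!/bin/sh
# Sketch: apply the three stacks from the walkthrough in dependency order.
# DRY_RUN is a hypothetical switch; it defaults to 1 and only prints commands.
DRY_RUN=${DRY_RUN:-1}

# Network first, then the EKS cluster, then the Kubernetes add-ons.
STACKS="accounts/sandbox/network/primary accounts/sandbox/eks/poc accounts/sandbox/k8s/poc"

# Prints the command in dry-run mode, otherwise executes it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Stops at the first failing command so later stacks are not applied.
apply_stacks() {
  for dir in $STACKS; do
    # The -chdir global option requires Terraform 0.14 or newer.
    run terraform -chdir="$dir" init || return 1
    run terraform -chdir="$dir" apply || return 1
  done
}

apply_stacks
```

Running it with DRY_RUN=0 executes the commands for real, and terraform apply will still prompt for confirmation in each directory.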

The aws-ia/terraform-aws-eks-blueprints repository provides a couple of approaches to managing the software inside of the Kubernetes cluster.

EKS Add-ons

The project has a concept of EKS add-ons that maps directly to the upstream AWS EKS Add-ons and gives you a very easy way to manage which version of these various tools you want to be installed in your cluster. You can see a list of EKS add-ons and which versions work with which K8s versions by running aws --profile=sandbox eks describe-addon-versions.
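The full output of that command is long, so it can help to narrow it down. The sketch below wraps the same command in a hypothetical helper function (addon_versions is our name, not an AWS one) and filters by add-on name and Kubernetes version. The vpc-cni and 1.21 values in the usage comment are only illustrative.

```shell
#!/bin/sh
# Sketch: list the published versions of one EKS add-on for one Kubernetes version.
# The sandbox profile comes from the walkthrough; the arguments are caller-supplied.
addon_versions() {
  addon_name=$1
  k8s_version=$2
  aws --profile=sandbox eks describe-addon-versions \
    --addon-name "$addon_name" \
    --kubernetes-version "$k8s_version" \
    --query 'addons[].addonVersions[].addonVersion' \
    --output text
}

# Example usage (illustrative values):
#   addon_versions vpc-cni 1.21
```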

Kubernetes Add-ons

All other Kubernetes software falls into the category of Kubernetes add-ons. The project comes with a list of currently supported add-ons, and this list will grow over time. There is also nothing preventing people from using their own forked repo so that they can more easily add and remove the add-ons that they want to use in their environment.

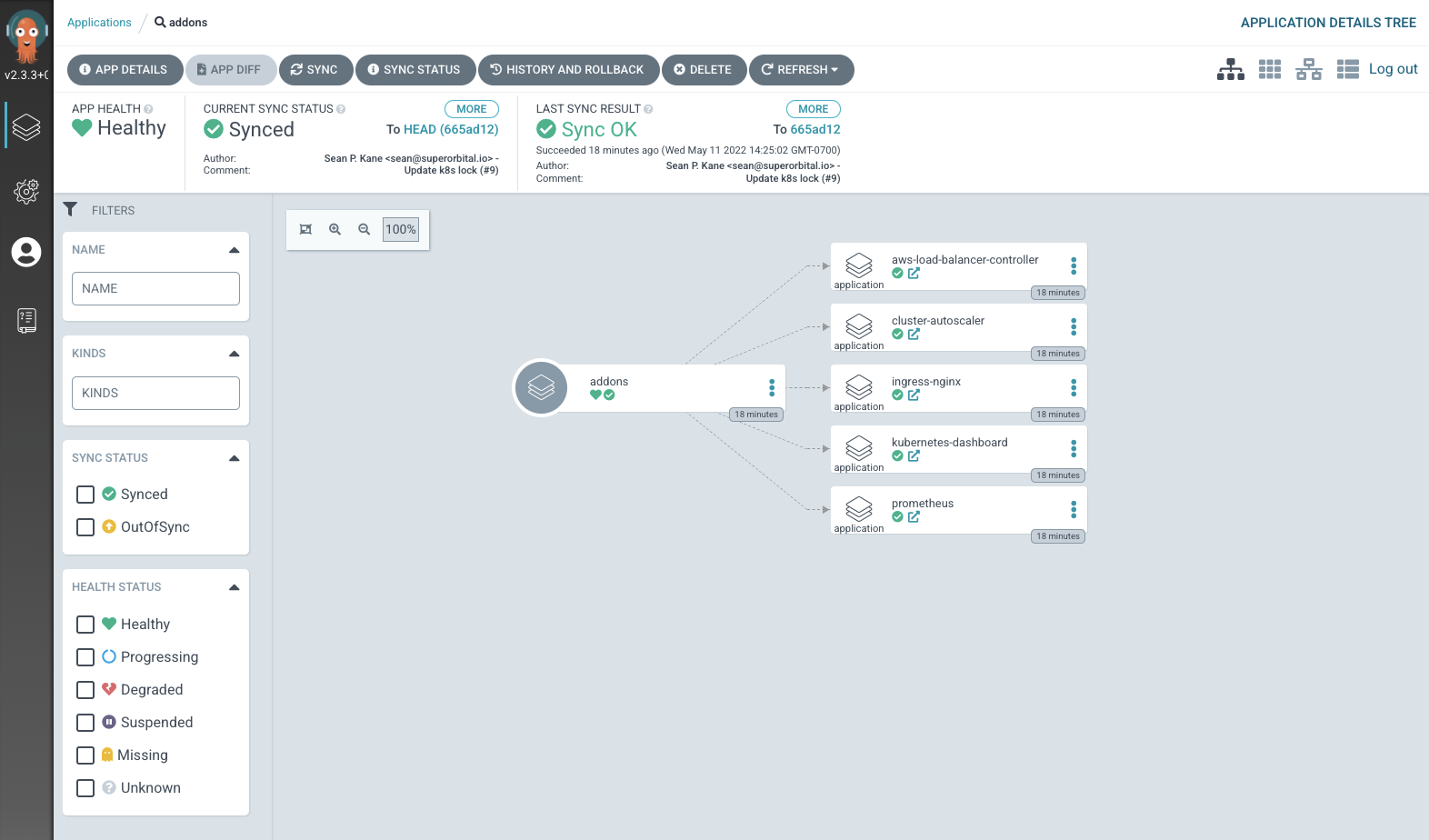

These add-ons can be installed into the cluster in a few ways. If you are not interested in using ArgoCD to manage these add-ons, then Terraform can simply install them all using Helm. However, if you want to install ArgoCD and use it to manage these add-ons, then a slightly different approach is taken.

When you set it up this way:

    enable_argocd         = true
    argocd_manage_add_ons = true
    argocd_applications = {
      addons = {
        path               = "/chart"
        // This should usually point at an internally owned and managed repo.
        repo_url           = "https://github.com/aws-samples/eks-blueprints-add-ons"
        project            = "default"
        add_on_application = true // This indicates the root add-on application.
      }
    }

Then ArgoCD is the only add-on installed via Helm, and an initial ArgoCD Application is created that follows the App of Apps pattern and is set up to install all the other add-ons that have been enabled in Terraform.

The process that enables this to work via Terraform is a bit involved, but the workflow ends up being a great way to get a fully working cluster bootstrapped utilizing Terraform, as a single tool.
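Once the bootstrap has finished, one way to confirm that the root add-on application has fanned out into child applications is to list the ArgoCD Application resources directly. This sketch assumes ArgoCD lives in the argocd namespace, as in the walkthrough; the argocd_apps function name is hypothetical.

```shell
#!/bin/sh
# Sketch: show each ArgoCD Application with its sync and health status.
# Assumes the argocd namespace from the walkthrough and a working kubeconfig.
argocd_apps() {
  kubectl -n argocd get applications.argoproj.io \
    -o custom-columns='NAME:.metadata.name,SYNC:.status.sync.status,HEALTH:.status.health.status'
}

# Example usage:
#   argocd_apps
```

The same information is visible in the ArgoCD UI; this is just a quicker check from a terminal.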

Everything Else

In general, our recommendation is to avoid using Terraform to install software into a Kubernetes cluster. However, this workflow is a very nice compromise, since we can limit Terraform’s goal to simply getting the cluster into a state where ArgoCD can take over and manage everything else.

Once ArgoCD is online, you can install anything you want using a very robust GitOps workflow.

- Love helm and want to use it to manage and install all your applications? No problem.

- Prefer kustomize and the flexible overlays that it provides? Easy.

- Need a way to simply kubectl apply some pre-baked manifest? ArgoCD has you covered.

Via the Terraform code, it is easy to point ArgoCD at some additional repositories which can then be used to install additional tools and services that you need inside your cluster.

    argocd_applications = {
      addons = {
        path               = "/chart"
        // This should usually point at an internally owned and managed repo.
        repo_url           = "https://github.com/aws-samples/eks-blueprints-add-ons"
        project            = "default"
        add_on_application = true // This indicates the root add-on application.
      },
      workloads = {
        path     = "/envs/dev"
        repo_url = "https://github.com/aws-samples/eks-blueprints-workloads"
        values   = {}
      }
    }

At this point, it is also easy to ignore Terraform completely and just use ArgoCD exactly as you would in any other setup.

Exploring the Blueprints

We have high hopes for the long-term success of this effort by AWS and really hope that it encourages other vendors to support their stacks in a similar way.

As we have dug into the project, we have learned a few things, while also helping AWS ensure that the project is flexible enough to be used in a variety of ways, despite its naturally opinionated nature.

The two biggest issues that we ran into early on were:

KMS Key Administration

The project uses KMS (Key Management Service) for the default data encryption, but only gives two roles complete access to the very secure KMS key. This is not necessarily a huge issue on the surface, but it is quite possible to get into trouble during testing: if those roles get deleted somehow, the KMS key can no longer be managed or deleted via Terraform or the AWS console. Recovering requires root access to the AWS account and manual intervention from the AWS support team, which can take a week or more to resolve.

To help improve this situation, we submitted a small feature PR that makes it possible for users to grant additional roles full access in the KMS key policy, using a new cluster_kms_key_additional_admin_arns variable.
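Whichever roles you configure, it is worth checking which principals actually ended up in the key policy before deleting any IAM roles. The following sketch uses the standard get-key-policy call; the kms_key_policy function name is hypothetical, and you would need to supply the key ID or ARN of your own cluster key.

```shell
#!/bin/sh
# Sketch: print the JSON key policy of a KMS key so its principals can be reviewed.
# The sandbox profile comes from the walkthrough; the key ID or ARN is caller-supplied.
kms_key_policy() {
  key_id=$1
  aws --profile=sandbox kms get-key-policy \
    --key-id "$key_id" \
    --policy-name default \
    --query Policy \
    --output text
}

# Example usage:
#   kms_key_policy <key-id-or-arn>
```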

Managed Node Groups Failing to Create

The other big issue that we ran into turned out to be a bit more tricky and took some investigation to track down. Even AWS support was a bit stumped at first.

When we tried to spin up a managed node group, we would see the nodes join the Kubernetes cluster just fine, but the node group would never become healthy. If you are interested, you can read the details in the above issue, but it basically turned out that we were the first users to set create_launch_template = true without also providing a custom ami_id. This is a perfectly valid setup but had been overlooked in testing.

In the end, it was possible to make some changes in the blueprints repo to fix the issue locally and then provide some additional feedback to AWS to hopefully improve the overall user experience.

- When replaying user-data in testing will bail user-data when strict due to moving files…

- EKS: Improve Health checking if Managed Nodegroup failed to join EKS cluster at creation stage
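If you hit a similar situation, where nodes join the cluster but the node group never settles, the node group's own status and reported health issues are a reasonable first place to look. This is a minimal sketch: nodegroup_health is a hypothetical helper name, the cluster name in the usage comment is the one from the walkthrough, and the node group name is whatever you configured.

```shell
#!/bin/sh
# Sketch: show the status and any reported health issues for a managed node group.
# The sandbox profile comes from the walkthrough; both names are caller-supplied.
nodegroup_health() {
  cluster_name=$1
  nodegroup_name=$2
  aws --profile=sandbox eks describe-nodegroup \
    --cluster-name "$cluster_name" \
    --nodegroup-name "$nodegroup_name" \
    --query 'nodegroup.{status: status, issues: health.issues}' \
    --output json
}

# Example usage:
#   nodegroup_health the-a-team-sbox-poc-eks <nodegroup-name>
```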

The Road Forward

We are continuing to actively work on this project in coordination with AWS and our engineering client, EBSCO. While our focus is on solving the engineering challenges that our client has been facing, we think that it is important to be an active participant in the broader open source ecosystem and to provide as many contributions and actionable feedback to maintaining teams as possible so that the resulting improvements can be enjoyed by everyone.

- Looking For Engineering Support?

- SuperOrbital has a team of experienced engineers who can engage with your teams and help accelerate your production operations projects. In addition, we provide in-depth workshops on many of the core technologies that modern engineering teams need to be comfortable with. If this sounds helpful, please reach out to us.

- Looking for Career Opportunities?

- If you are experienced with operating and developing for Kubernetes, Terraform, and Linux containers, and this work sounds interesting to you, then please consider applying.